November 3, 2025

A faked viral video of a white CEO shoplifting is one thing. What happens when an AI-generated video incriminates a Black suspect? That’s coming, and we’re completely unprepared.

An AI-generated video on the platform Sora purports to depict OpenAI CEO Sam Altman shoplifting from Target.

(Via x.com)

Last month, social media was flooded by a CCTV clip of Sam Altman, the CEO of OpenAI, stealing from Target and getting stopped by a store security guard. Except he wasn’t, really: that was just the first clip to go viral from Sora, OpenAI’s new social media platform for AI video, which is to say, an app created solely so people can make, post, and remix deepfakes. Sora isn’t the first app that lets people create phony videos of themselves and others, though the realism of its output is groundbreaking. Still, it’s all harmless, satirical fun when the subject is a white tech billionaire who, even with hyperrealistic video of the crime, no one believes would ever commit petty theft.

But the disturbing implications of this technology are clear as soon as you consider that AI can be used just as easily to make deepfakes that incriminate the poor, the marginalized, and the already over-policed: folks for whom guilt is the default conclusion, even on the flimsiest evidence. What happens when racist police, convinced as they so often are of a suspect’s wrongdoing based solely on the evidence of their Blackness, are presented with AI-generated video “proof”? What about when law enforcement officials, who are already legally permitted to use faked incriminating evidence to dupe suspects into confessing (real-life examples have included forged DNA lab reports, phony polygraph test results, and falsified fingerprint “matches”), start regularly using AI to manufacture “incontrovertible evidence” for the same purpose? How long until, as legal scholars Hillary B. Farber and Anoo D. Vyasin suggest, “the police show a suspect a deepfaked video of a witness who claims to have seen the suspect commit the crime, or a deepfaked video of ‘an accomplice’ who confesses to the crime and simultaneously implicates the suspect”? Or, as Wake Forest law professor Wayne A. Logan asks, until law enforcement starts regularly showing innocent-but-assumed-guilty suspects deepfaked “video falsely indicating their presence at a crime scene”? “It is inevitable that this type of police fabrication will enter the interrogation room,” Farber and Vyasin conclude in a recent paper, “if it has not already.”

We should also assume it’s only a matter of time before law enforcement officers, who lie under oath so often that the term “testilying” exists specifically to describe police perjury, begin creating and planting that evidence on innocent Black people. This isn’t paranoia. The criminal justice system already disproportionately railroads Black folks, who make up just 13.6 percent of the US population but account for nearly 60 percent of those exonerated since 1992 by the Innocence Project. What’s more, almost 60 percent of Black exonerees were wrongly convicted thanks to police and other officials’ misconduct (compared to just 52 percent for white exonerees). The numbers are even more appalling when it comes to wrongful convictions for murder, with a 2022 report finding Black people are nearly eight times more likely to be wrongly convicted of murder than white people. Official misconduct helped wrongfully convict 78 percent of Black people exonerated of murder, versus 64 percent of white exonerees. In death penalty cases, misconduct was a factor in 87 percent of cases with Black defendants, compared to 68 percent of cases with white defendants. The Innocence Project has found that almost one in four people it has freed since 1989 had pleaded guilty to crimes they didn’t commit, and that the vast majority of those, about 75 percent, were Black or brown. Imagine how those numbers will look when you add AI to the tricks of the coercive trade.

Sora and the other AI slop factories that represent its major competitors, including Vibes from Meta (ex-Facebook) and Veo 3 by Google, claim to have ways to prevent this kind of misuse. All of those companies are also part of the Coalition for Content Provenance and Authenticity (C2PA), which develops technical standards meant to verify the provenance and authenticity of digital media. In keeping with those standards, OpenAI has pointed out that Sora embeds metadata in every video, along with a visible “Sora” watermark that bounces around the frame to make removal harder. But that’s clearly not enough. Predictably, within roughly a day of Sora’s launch, watermark removers were being advertised online and shared across social media. And some people started adding Sora watermarks to perfectly real videos.

Other controversy has followed. After the Motion Picture Association and a host of other corporate behemoths with massive legal teams demanded “immediate and decisive action” against copyright violations, OpenAI added “semantic guardrails” that prevent certain terms from being translated into images. That includes prohibiting image generation of living celebrities and other trademarked figures, and specifically blocking videos of Martin Luther King Jr.’s likeness. (OpenAI is already fighting lawsuits, brought by plaintiffs including The New York Times and authors Ta-Nehisi Coates, John Grisham, and George R.R. Martin, charging that its AI chatbot ChatGPT regurgitates copyrighted books; 50 similar cases are now pending against generative-AI firms in courts across the United States.) Hany Farid, a professor of computer science at UC Berkeley who is often dubbed the “Father of Digital Forensics,” pointed out to me that additional safeguards exist, including Google’s SynthID and Adobe’s TrustMark, which function as invisible watermarks. But users hell-bent on misuse will find a way.

“Let’s say OpenAI did everything right. They added metadata. They added visible watermarks. They added invisible watermarks. They had really good semantic guardrails. They made it really hard to jailbreak. The truth is, it doesn’t matter, because somebody is going to come along and make a bad version of this, where you can do whatever you want. And in this space, we’re only as good as the lowest common denominator.”

Farid pointed to Grok, the AI chatbot and image-generator owned by Elon Musk, as an example of what happens when that lowest common denominator rules. A complete lack of restriction allowed the app to spew disinformation ahead of the 2024 election, and to create nonconsensual sexually explicit imagery involving real people, both famous and unknown. This summer, the Rape, Abuse & Incest National Network issued a statement warning that the app “will lead to sexual abuse.”

“At the end of the day, once you’re in the business of doing what OpenAI is doing [with] Sora or Google’s Veo or ElevenLabs voice cloning, you’re opening Pandora’s box. And you can put as many guardrails as you want—and I’m hoping that people ultimately put up better guardrails. But at the end of the day, your technology is going to be jailbroken. It’s going to be misused. And it’s going to lead to problems.”

Studies find that AI is 80 percent more likely to reject Black mortgage applicants than white ones; that it discriminates against women and Black people in hiring; and that it offers erroneously negative profiles of Black rental applicants to landlords—racist practices known as “algorithmic redlining.” Likewise, AI has contributed to racial disparities in criminal justice by replicating pervasive cultural notions about Blackness and criminality being intertwined. A facial recognition system once included a photograph of Michael B. Jordan, the internationally famous Black American movie star, in a lineup of suspected gunmen following a 2021 mass shooting in Brazil. As of this August, at least 10 people—nearly all of them Black men and women—have been wrongfully arrested because they were misidentified by facial recognition. A ProPublica investigation from nearly a decade ago warned that AI risk assessment software used by courts to decide sentencing lengths was “particularly likely to falsely flag Black defendants as future criminals, wrongly labeling them this way at almost twice the rate as white defendants.”

Just a couple of weeks ago, an AI gun detection system at a high school in Baltimore, Maryland, mistook a Black student’s bag of Doritos for a weapon and alerted police, who were then dispatched to the school. Roughly 20 minutes later, “police officers arrived with guns drawn,” according to local NBC affiliate WBAL; they made the student get on the ground and then handcuffed him. “The first thing I was wondering was, was I about to die? Because they had a gun pointed at me. I was scared,” Taki Allen, the 16-year-old student, told the outlet. Given that police often take a shoot-now, ask-later approach, especially when dealing with young Black men, a teenage boy could well have ended up dead because of the overpolicing of a majority Black and brown high school, police primed to see Blackness as an inherently violent threat, and an AI’s faulty pattern recognition.

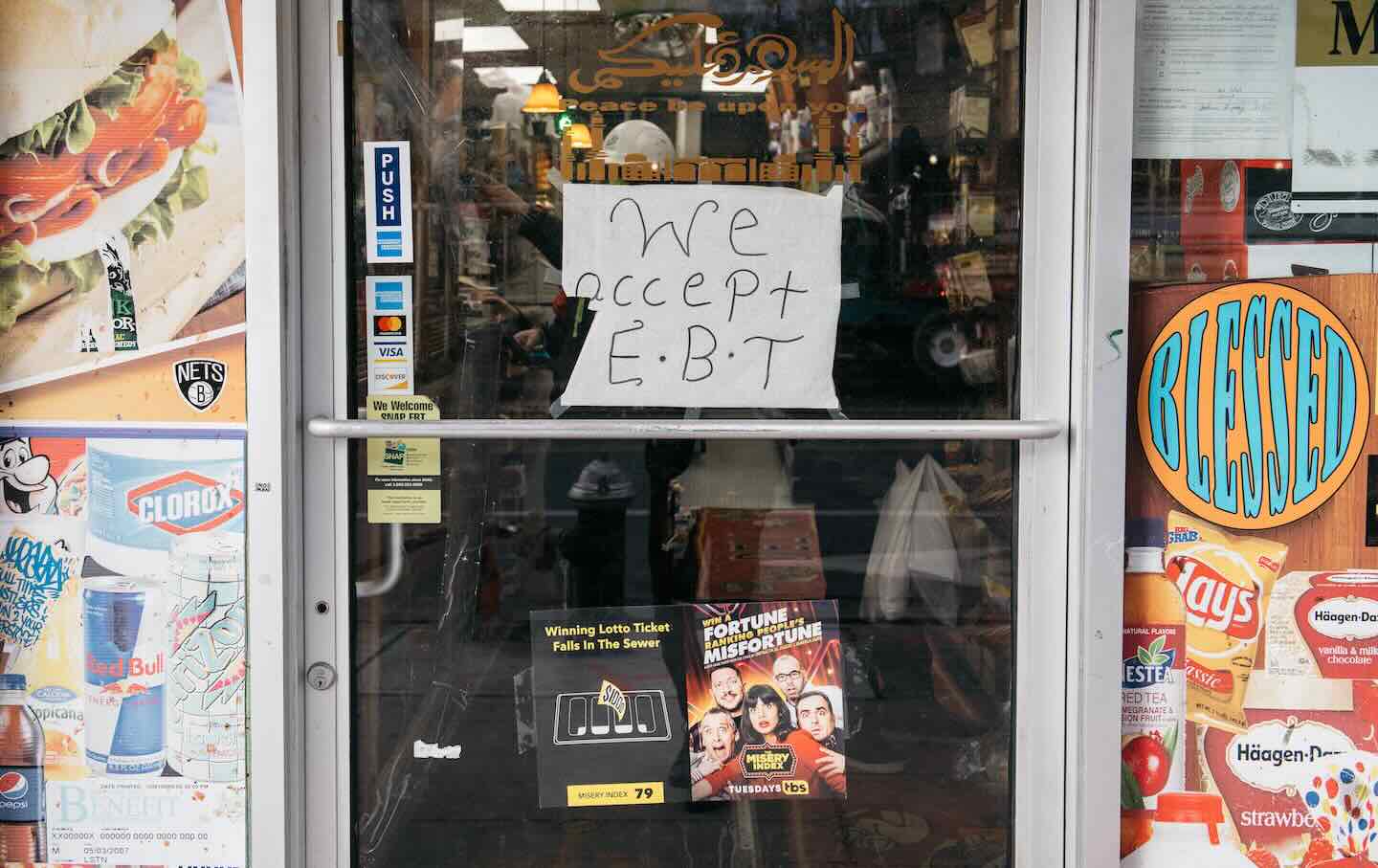

So often, the discussion about techno-racism is focused on racial bias that’s baked into algorithms: how new technologies unintentionally replicate the racism of their programmers. And AI has indeed proved that technology can inherit racism from its makers, and replicate it once out in the world. But we should also be considering the ways AI will almost certainly be intentionally weaponized and (mis)used. For example, a failed TikTok influencer with the handle impossible_asmr1 struck gold recently when they began using Sora to create deepfakes of nonexistent angry Black women trying to use EBT cards in situations where it would be patently ridiculous to do so, then crashing out when the cards are rejected, complete with long strings of expletives shouted directly to the camera. Just last week, Fox News was suckered by a batch of wildly racist AI videos featuring Black women ranting about losing SNAP benefits during the shutdown, and reported on them as fact. In a piece originally headlined “SNAP Beneficiaries Threaten to Ransack Stores Over Government Shutdown,” Fox News writer Alba Cuebas-Fantauzzi credulously reported that one woman (again, a completely fabricated AI generation depicted as real) said, “I have seven different baby daddies and none of ’em no good for me.” (After both mockery and outcry across social media, the outlet changed the headline to “AI videos of SNAP beneficiaries complaining about cuts go viral” but didn’t admit its mistake.) Obviously, a not-insignificant part of the problem is that Fox News thought this was a story worth covering at all, because it is an outlet that treats race-baiting as central to its mission. But the ease with which deepfakes will now help it in that mission is also a pretty big concern.

Of course, using anti-Black images taken not from real life but from the racist white imagination to create ragebait that encourages yet more anti-Black racism is an American tradition, from minstrel shows to Birth of a Nation to books with illustrations of lazy Sambos.

It’s scary enough when a media outlet is airing faked racist videos. But this is the sort of “evidence” that the Trump administration (operating as an openly racist regime, indifferent to civil rights, and clear about its eagerness to use local problems as justifications for a nationalized police state) could use to launch military incursions into American cities or disappear American citizens. Following several executive orders that encourage more police authority and less accountability, in September Trump signed a directive (“Countering Domestic Terrorism and Organized Political Violence,” or NSPM-7) that explicitly claims threats to national security include expressions of “anti-American,” “anti-Christian,” and “anti-capitalist” sentiments: language so intentionally vague that any dissent can be labeled left-wing terrorism. When a government claims that all “activities under the umbrella of self-described ‘anti-fascism’” are considered “pre-crime” indicators, it is signaling its readiness to use fabricated evidence as proof. Trust that the same Homeland Security apparatus that photoshopped, poorly, gang tattoos onto the fingers of Kilmar Ábrego García will be manufacturing guilt in no time.

And there are other, perhaps less obvious ways in which the most vulnerable will be disadvantaged. Riana Pfefferkorn, a policy fellow at Stanford’s Institute for Human-Centered Artificial Intelligence, recently conducted a study of what kinds of lawyers had been caught submitting briefs with “hallucinations,” the term for AI’s tendency to invent citations to nonexistent sources, like those that filled a recent “scientific” report from Health and Human Services head Robert F. Kennedy Jr. She found the most hallucinations in briefs from small firms or solo practices, meaning attorneys who are likely stretched thinner than those at white-shoe firms, which have staff to catch AI mistakes.

“It connects back to my fear that the people with the fewest resources will be most affected by the downsides of AI,” Pfefferkorn said to me. “Overworked public defenders and criminal defense attorneys, or indigent people representing themselves in civil court—they won’t have the resources to tell real from fake. Or to call on experts who can help determine what evidence is and isn’t authentic.”

Now that reality itself can be faked, not only will we see that fakery used to frame Black Americans and other vulnerable populations for crimes, but authorities will almost certainly claim that real footage exonerating them is fake. University of Colorado associate professor Sandra Ristovska, who studies how visual imagery affects social justice and human rights, warns that this is likely already happening in courtrooms.

“The more people know that you can make a fake video of, like, the CEO of OpenAI stealing, the more likely we’ll see what my colleagues have named the ‘reverse CSI’ effect,” she told me, referring to a concept that, as applied specifically to deepfakes, was first articulated in a 2020 law paper by Pfefferkorn. “That means we’ll start seeing these unreasonably high standards for authentication of videos in court, so that even when an authentic video is admitted under the standards in our legal system, jurors may give little or no weight to it, because anything and everything can be dismissed as a deepfake. That’s a very real concern: how much deepfakes are potentially casting doubt on reliable, authentic footage.”

And those who benefit from presumptions of innocence will be able to exploit those same doubts and ambiguities to their own advantage, a phenomenon law professors Bobby Chesney and Danielle Citron call “the liar’s dividend.” In their 2018 law paper introducing the term, the legal scholars warned that pervasive cries of “fake news” and distrust of truth would empower liars to dismiss real evidence as false. Among the first known litigants to invoke what’s now known as the “deepfake defense” are Elon Musk and two January 6 defendants, Guy Reffitt and Josh Doolin. Although their claims failed in court, privileged litigants stand to gain from the same confusion that’s weaponized against marginalized folks.

All this, of course, has clear implications for police accountability. Not only will fake footage be used against people; it will likely be deployed to justify racialized state violence. “Think about the George Floyd video,” Farid noted to me. “At the time, with the exception of a few wackos, the conversation wasn’t, ‘Is this real or not?’ We could all see what happened. If that video came out today, people would be saying, ‘Oh, that video is fake.’ Suddenly every video (body cam, CCTV, somebody filming human rights violations in Gaza or Ukraine) is suspect.”

Rebecca Delfino, an associate professor of law at Loyola University who also advises judges on AI, shared similar concerns. “Deepfakes are extra challenging because it’s not just a false video, it’s basically a manufactured witness,” she told me. “It risks due process and racial justice, and the risks are profound. The weaponization of synthetic media does not just distort facts. It erodes trust in institutions charged with finding them.”

As it stands, our entire legal system is unprepared to deal with the scale of fakery that deepfakes make possible. (“The law always lags behind technology,” Delfino told me. “We’re at a really dangerous tipping point in terms of having these rules that were written for an analog world, but we’re not there anymore.”) Rule 901 of the Federal Rules of Evidence governs authentication, requiring that items such as documents, videos, and photographs be shown to be what their proponents claim they are before being admitted into evidence. But if jurors think there’s any possibility a video could be fake, the damage is done. Delfino says that in open court, the allegation that an evidentiary video is a deepfake would be “so prejudicial and so dangerous because a jury cannot unhear it; you can’t unring that bell.”

The federal courts’ Advisory Committee on Evidence Rules has been considering amendments to Rule 901, largely in response to AI, for roughly two years. Delfino’s proposal posits that judges should settle the question of authenticity in a pretrial hearing, consulting experts as needed, before the case proceeds. Under her proposal, the judge would serve as a gatekeeper: any video admitted into evidence would already have cleared a threshold of authenticity, ruling out speculation about fakery during trial and leaving juries free to treat admitted evidence as authentic. Following deliberations in May of this year, the committee declined to amend the rule, citing “the limited instances of deepfakes in the courtroom to date.” That is disheartening, but Delfino suggests this might be a chicken-and-egg issue, since the committee is currently taking public comment on a proposed rule that would apply the same scientific-reliability standards it places on expert testimony under Rule 702 to AI-generated evidence in general. “To my mind, that’s a good step, and an important one,” she told me. “Before this type of evidence is offered, the same way we treat DNA, blood evidence, or X-rays, it needs to meet the threshold of scientific reliability and verifiability.”

That said, Ristovska worries about how AI will compound existing problems in the courtroom. A whopping 80 percent of criminal cases, according to the Bureau of Justice Assistance, include video evidence. “Yet our court system, from state courts to federal courts to the Supreme Court, still lacks clear guidelines for how to use video as evidence,” she told me.

“Now, with AI and deepfakes, we’re adding another layer of complexity to a system that hasn’t even fully addressed the challenges of authentic video,” Ristovska added. “Factors like cognitive bias, technology, and social context all shape how people see and interpret video. Playing footage in slow motion versus real time, or showing body camera versus dashboard camera video, can influence jurors’ judgments about intent. These factors disproportionately affect people of color in a legal system already structured by racial and ethnic disparities.”

And there are still other concerns. In a 2021 article, Pfefferkorn was already calling for smartphones to include “verified at capture” technology to authenticate pics and video at the moment of creation, and to determine whether they were later tampered with or altered. Perhaps we’re near a deepfake tipping point where such technology will become part of our phones, but that fix isn’t here yet. Delfino told me she had thought that the fallout from unregulated Grok output, which included nonconsensual, and therefore dangerous, deepfakes of many famous white women, would do more to increase the urgency around AI authentication. Unfortunately, as she noted, we’re likely looking at a situation where the system will drag its feet until more rich and powerful people experience deepfakes’ harms. And Ristovska followed up with a message noting that, while there is a human rights need for robust provenance technologies at the point of capture, it’s also important that those same technologies not imperil the anonymity of “whistleblowers, witnesses, and others whose lives could be endangered if their identities are disclosed.”

In the meantime, the most vulnerable remain so. AI has not been a friend to Black Americans, and deepfakes threaten to make the pursuit of justice, already a far-from-complete struggle, even bleaker. It seems that every new technology promises progress but is first weaponized against the same people, from fingerprinting used in service of racist pseudoscience and coerced confessions to predictive-policing algorithms that disproportionately surveil Black neighborhoods. Deepfakes will almost certainly continue that history. Video was once a means of vindication, shutting down official lies. But the cellphone revolution, it seems, has been surprisingly short-lived. I fear a future in which the very means of exonerating the innocent will be refashioned as tools for harassment, framing, and systemic injustice.
